Introduction

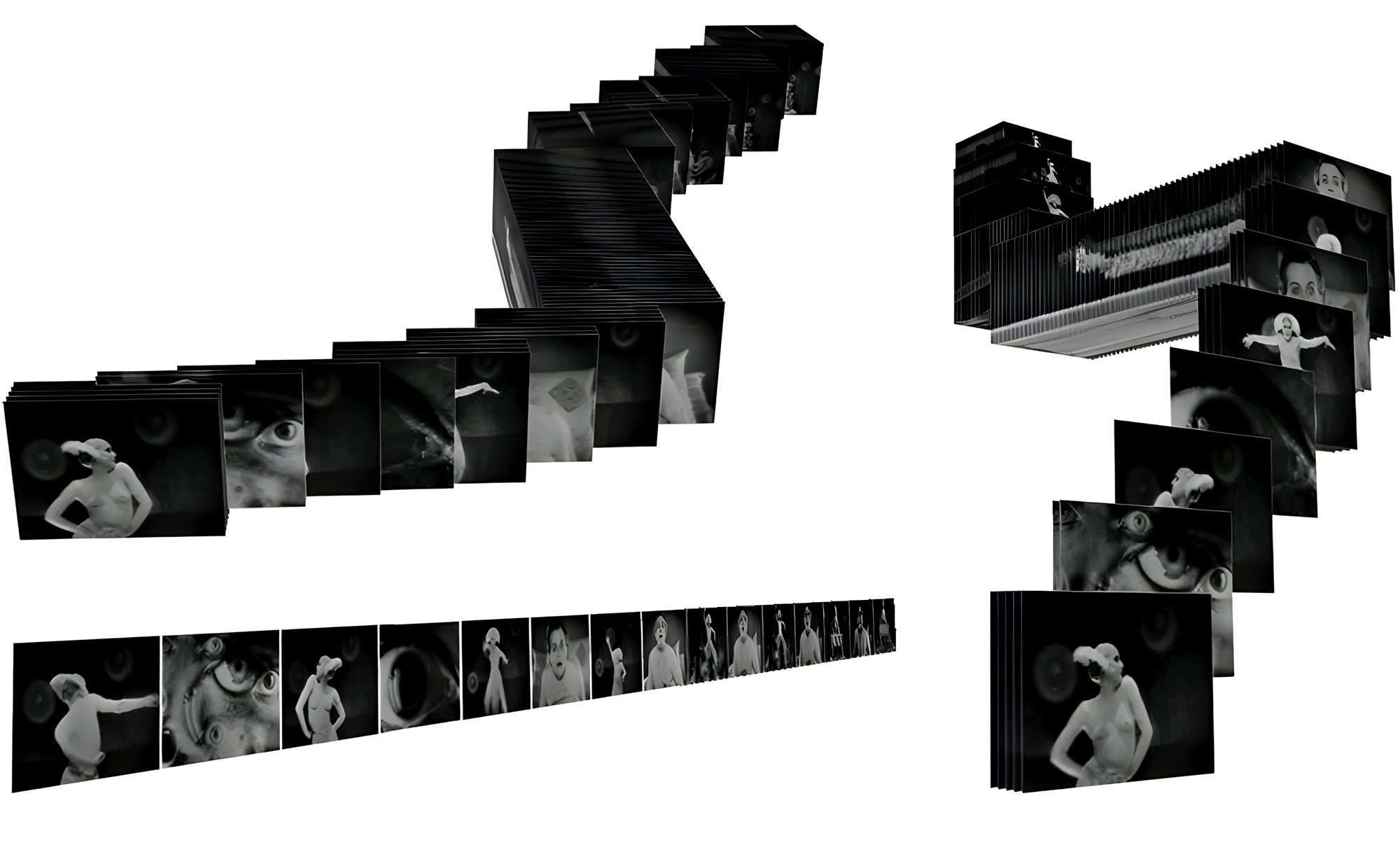

Tools for analyzing film sequences

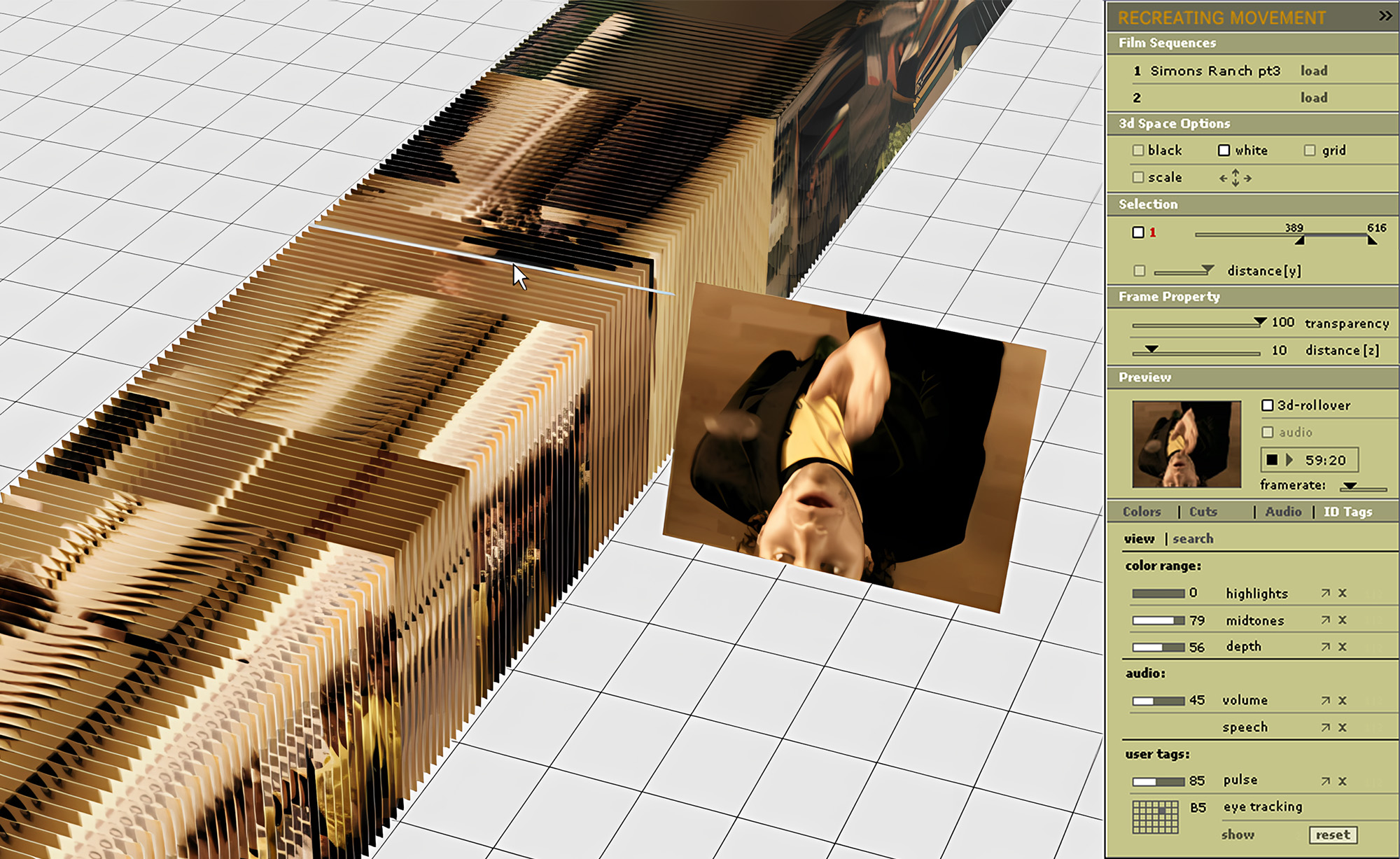

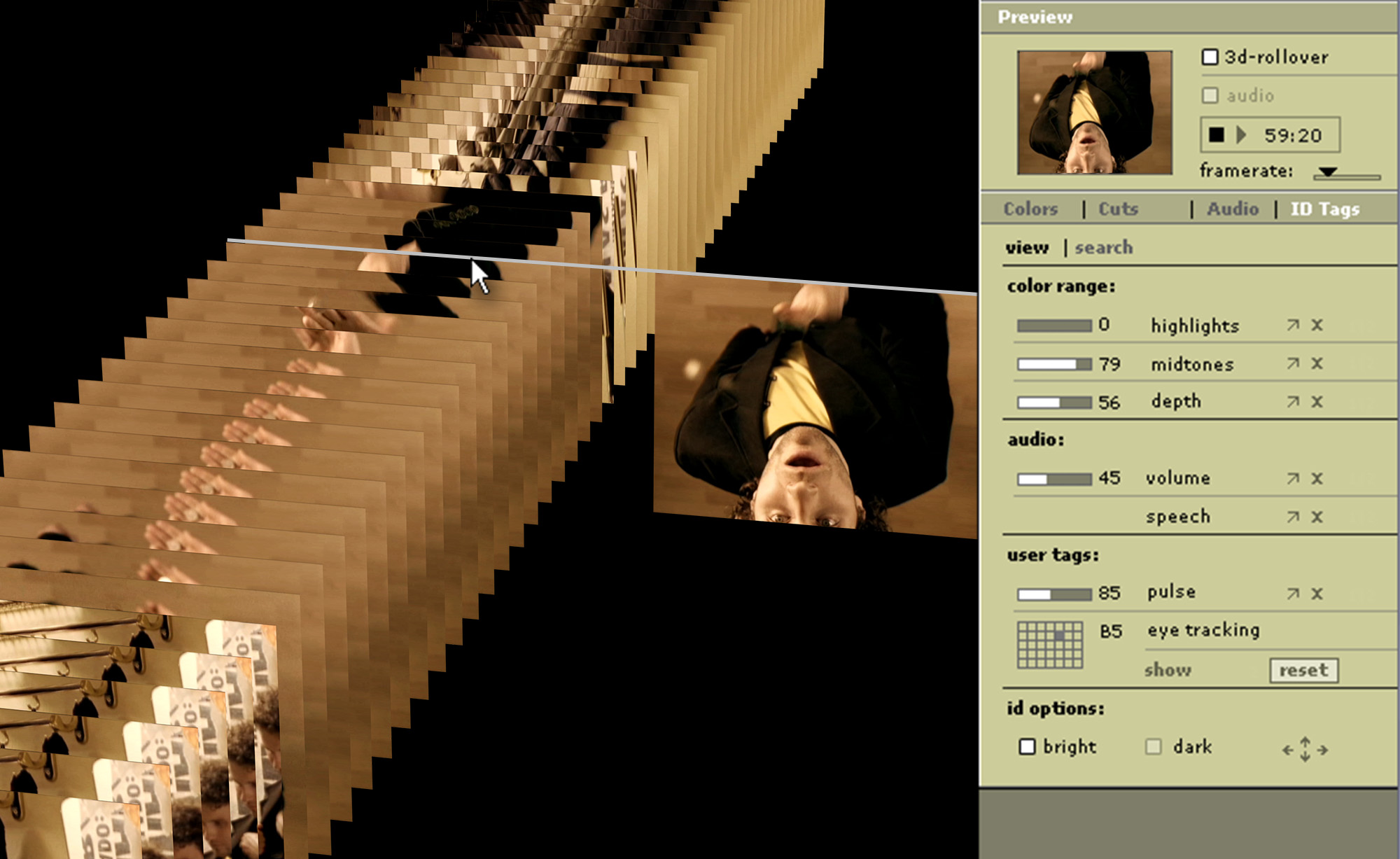

Recreating Movement is an experimental software tool for analyzing short film sequences. Originally developed in 2005 as a diploma thesis, the application breaks down video into individual frames and arranges them sequentially within a 3D space. The result is a compelling, tube-like structure that freezes a span of cinematic time.

The user can freely navigate through this sequence, exploring it from any angle within the 3D environment. An intuitive interface provides customizable settings and filters, creating a fascinating visual experience.

The project serves as a design-driven exploration–offering new ways to experience film–and should be seen as a developing concept, not a finished product.

Single Frames in Film Sequences

In traditional cinema, the illusion of motion stems from projecting still frames in rapid succession–typically 24 frames per second. The human eye cannot perceive each frame individually at this speed; instead, the brain merges them into a seamless flow, making the images appear to move naturally.

Arranging Frames in 3D Space

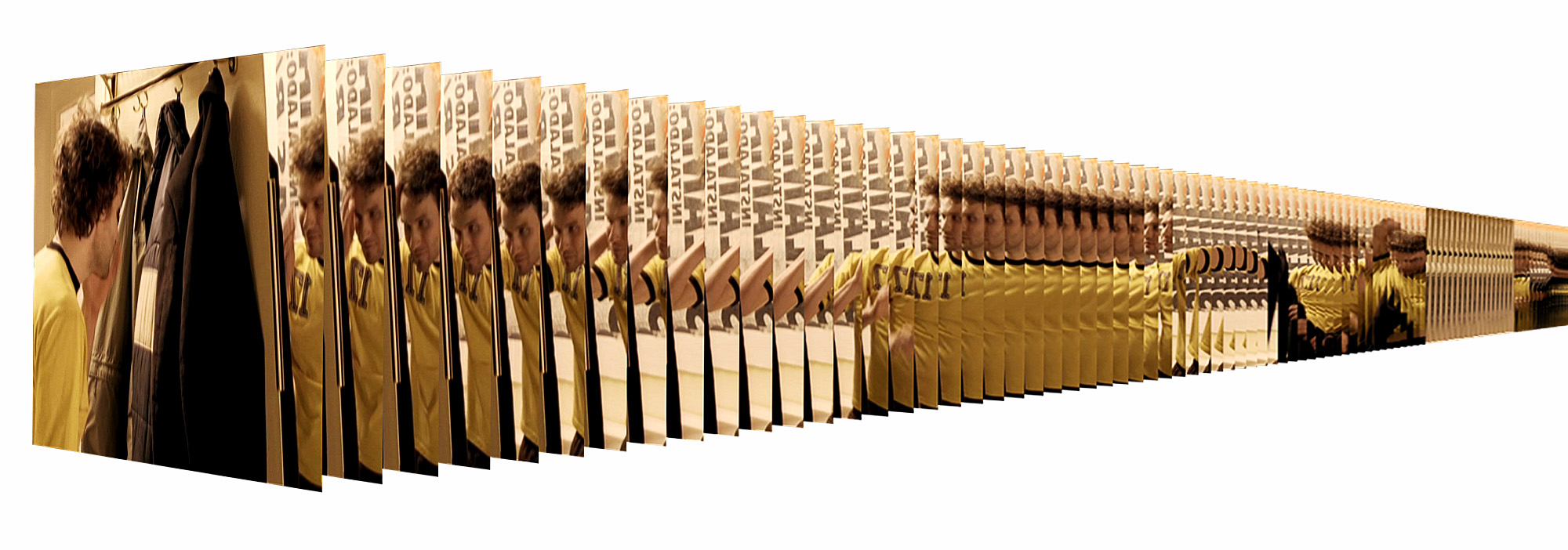

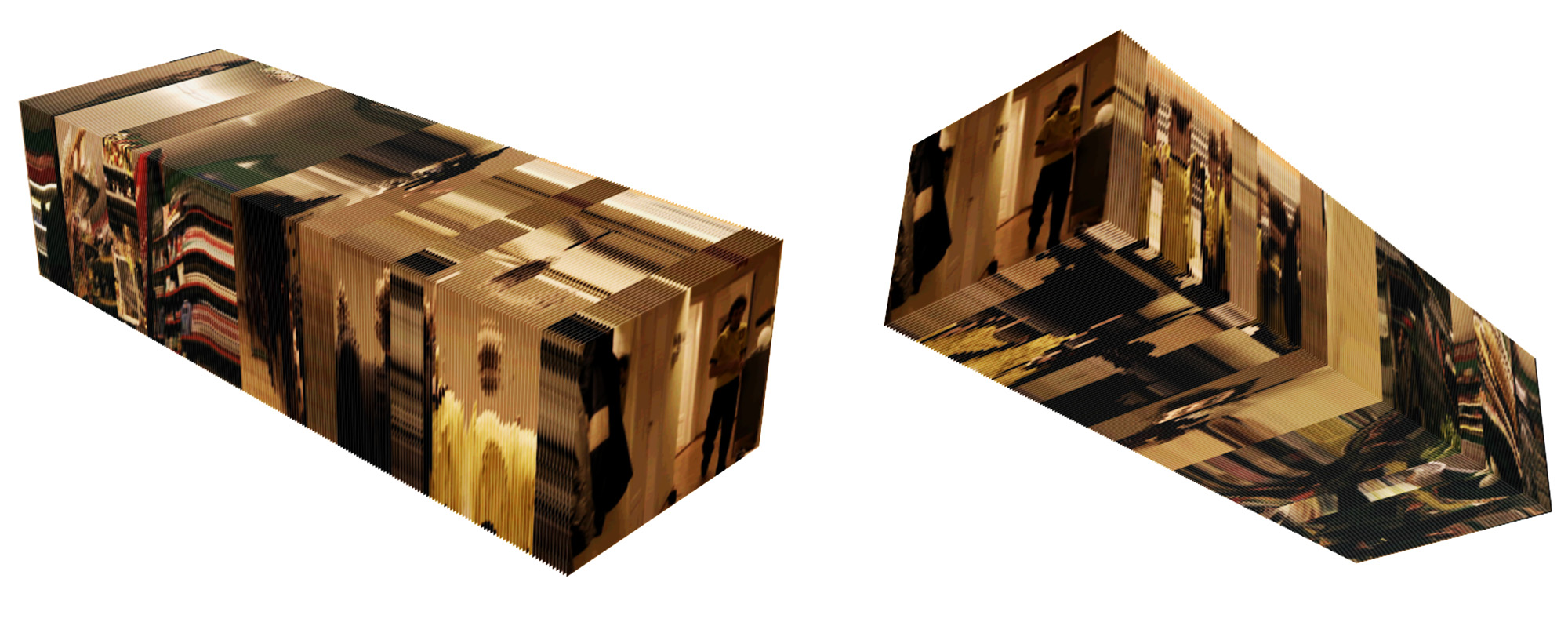

Each frame is extracted and placed sequentially within a 3D space, creating a spatial timeline. This arrangement turns the flow of time into a tangible visual structure–almost like sculpting motion itself.

Basic Functions

Navigation and Frame Distances

Keyboard controls allow users to navigate the 3D space from any angle, freely adjusting their viewpoint within the sequence. To focus more closely on particular frames, the distances between them can be increased or decreased as needed.
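The layout described above can be sketched as follows. It assumes each frame is a flat image stacked along the scene's z-axis at a user-adjustable spacing. The names `FramePlacement`, `frame_positions` and `spacing` are hypothetical, and this is a minimal illustration rather than the original application's code.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FramePlacement:
    index: int  # position of the frame in the film sequence
    z: float    # depth coordinate in the 3D scene


def frame_positions(frame_count: int, spacing: float) -> list[FramePlacement]:
    """Stack frames along the z-axis, one `spacing` unit apart.

    Frame 0 sits at z = 0 and later frames recede into the scene
    (negative z), so the sequence reads front to back like a tube of stills.
    """
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    return [FramePlacement(i, -i * spacing) for i in range(frame_count)]
```

Increasing `spacing` pulls the frames apart without changing their order. The total depth of the tube is `(frame_count - 1) * spacing`.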

Orientation in 3D Space

To assist orientation in the 3D space, a floor grid can be displayed as a visual guide. A timeline scale runs alongside the sequence, marking both frames and seconds. Users can also customise the background colour to optimise contrast and enhance the visibility of the film content.
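At 24 frames per second, each frame occupies 1/24 s (about 41.7 ms). A combined frame and second scale therefore only needs a major tick on every 24th frame. The following is a minimal sketch. It assumes frames are stacked along the z-axis at a fixed spacing, and `timeline_ticks` is a hypothetical helper.

```python
def timeline_ticks(frame_count: int, fps: int = 24, spacing: float = 1.0):
    """Return one (z, label) tick per frame for a scale beside the sequence.

    Every frame gets an unlabelled minor tick. Every full second (each
    `fps`-th frame) gets a major tick labelled in seconds. Frame i is
    assumed to sit at z = -i * spacing.
    """
    ticks = []
    for i in range(frame_count):
        label = f"{i // fps}s" if i % fps == 0 else ""
        ticks.append((-i * spacing, label))
    return ticks
```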

Play Modes

The film sequence can also be played in two distinct modes:

Play Mode 1: Frames move forward sequentially, with the front frame gradually fading out.

Play Mode 2: Frames start in reverse order. During playback, the front frame fades in and then shifts to the back as the next frame appears.
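Play Mode 1 can be approximated with a fractional playhead measured in frames. This sketch assumes two details that the original does not specify. The fade is linear and lasts exactly one frame interval, and the stack slides toward the viewer at constant speed. Play Mode 2 is not modelled here, and `mode1_state` is a hypothetical name.

```python
import math


def mode1_state(frame_count, playhead, spacing=1.0):
    """Return (z, opacity) per frame for a hypothetical Play Mode 1.

    `playhead` is measured in frames (e.g. seconds * 24). The whole stack
    advances toward the viewer. The frame currently at the front fades out
    linearly over one frame interval, and frames already passed are hidden.
    """
    front = math.floor(playhead)
    fade = playhead - front              # progress of the current fade, 0.0 to <1.0
    state = []
    for i in range(frame_count):
        z = -(i - playhead) * spacing    # stack slides forward as the playhead grows
        if i < front:
            opacity = 0.0                # already played and faded away
        elif i == front:
            opacity = 1.0 - fade         # front frame fading out
        else:
            opacity = 1.0                # still waiting in the stack
        state.append((z, opacity))
    return state
```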

Preview Mode

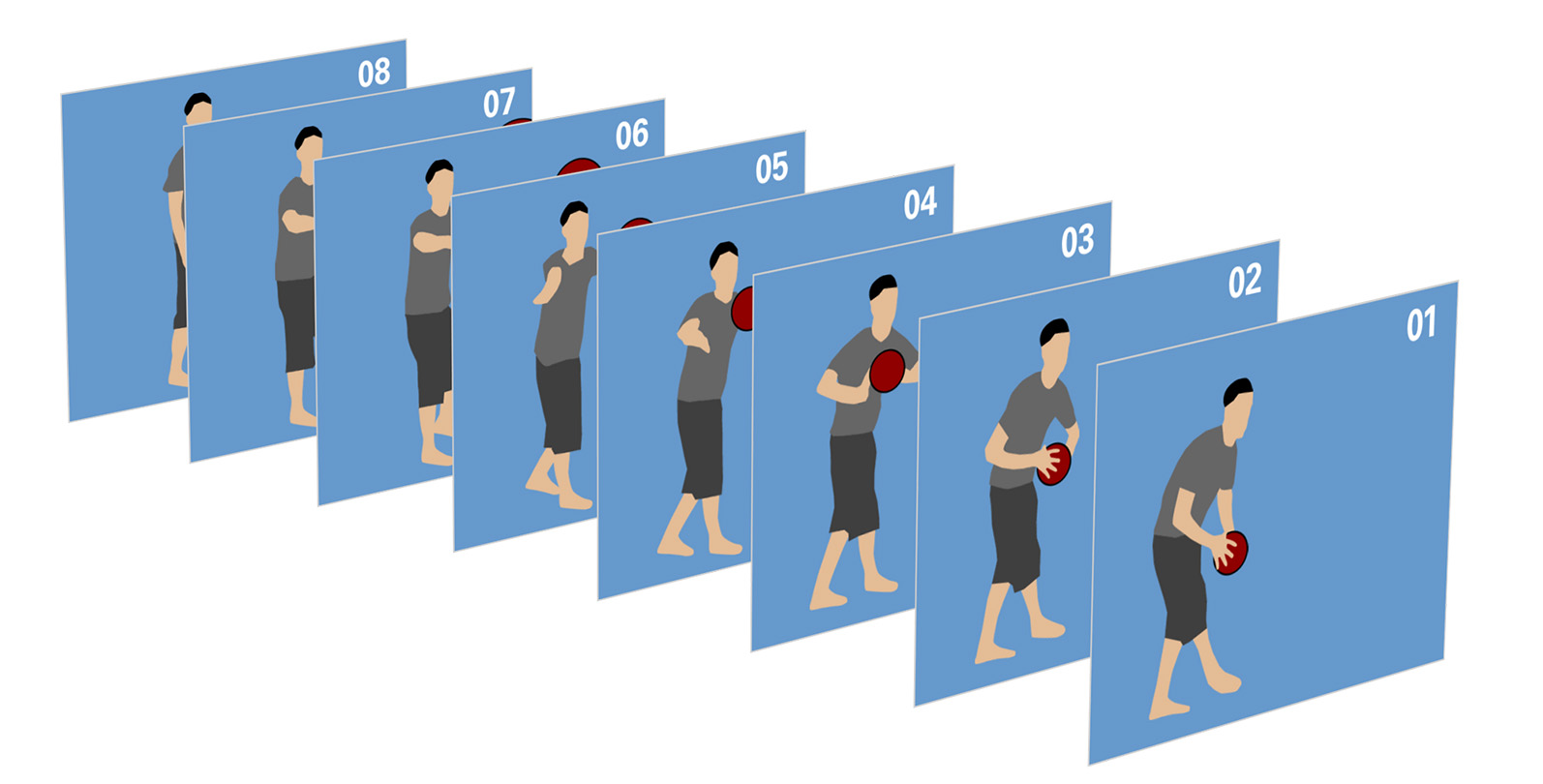

Preview mode allows direct interaction with individual frames in the 3D space. Hovering the cursor over a frame generates a duplicate alongside the sequence. This intuitive function makes browsing feel like flipping through the pages of a book.

Frame-by-Frame Selection

Users can freely select frames from a sequence, with the option to keep the unselected frames visible in faded form for context. This setup enables a layered view of time: what is, what was, and what is about to unfold.

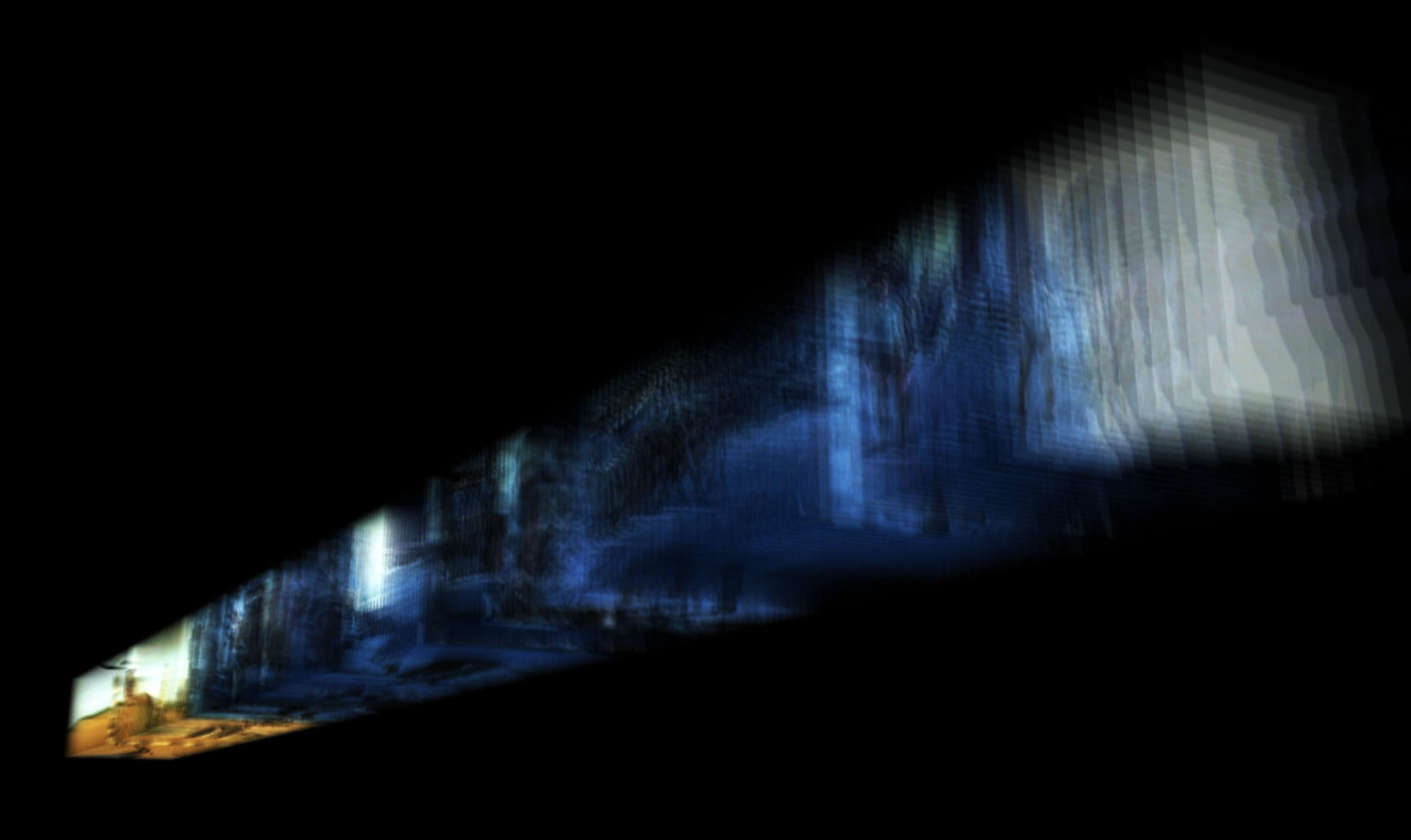

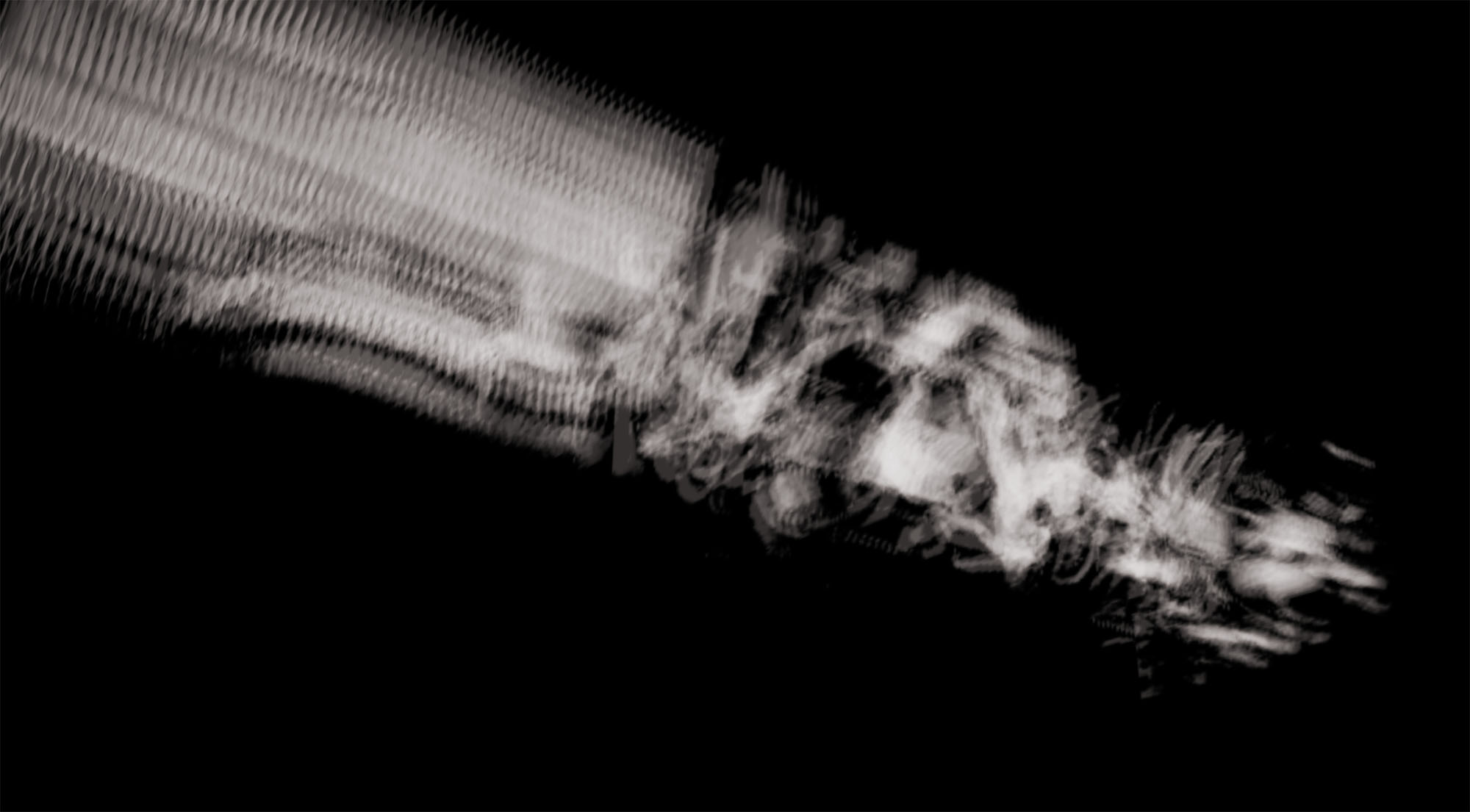

Adjusting Frame Transparency

Increasing frame transparency causes the frames' box-like structures to blur into hazy, cloud-like forms. As outlines dissolve, internal colours and motion dynamics become more pronounced, revealing the film's energy in a more organic, abstract way.
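Standard front-to-back alpha compositing ("over" accumulation) helps explain why stacked translucent frames merge into clouds. Each layer contributes only the part of the view that earlier layers have not already covered. This is a generic sketch of that technique, not a description of the original renderer.

```python
def composite_front_to_back(layers):
    """Blend a column of translucent frame pixels, nearest layer first.

    `layers` is a list of ((r, g, b), alpha) with all values in 0..1.
    Returns the accumulated colour (premultiplied, i.e. as seen over black)
    and the accumulated opacity.
    """
    color = [0.0, 0.0, 0.0]
    acc_alpha = 0.0
    for (r, g, b), a in layers:
        weight = (1.0 - acc_alpha) * a   # share of this layer still visible
        color[0] += weight * r
        color[1] += weight * g
        color[2] += weight * b
        acc_alpha += weight
        if acc_alpha >= 0.999:           # stop once the column is effectively opaque
            break
    return tuple(color), acc_alpha
```

With ten layers at 10% opacity, the accumulated coverage is 1 - 0.9**10 ≈ 0.65. Dense regions of the tube therefore stay visible, while the thin edge of any single frame almost disappears.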

Extended Features

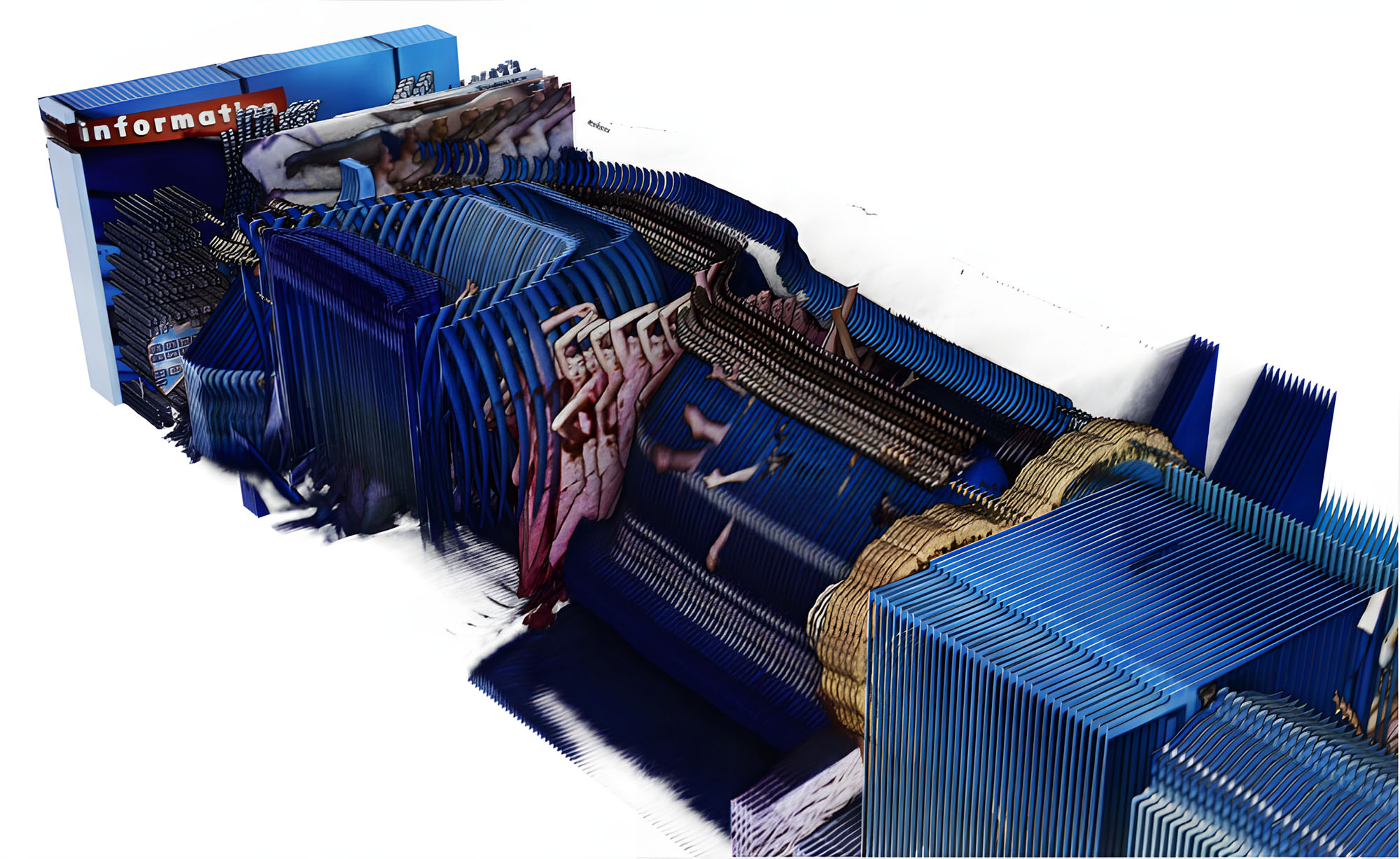

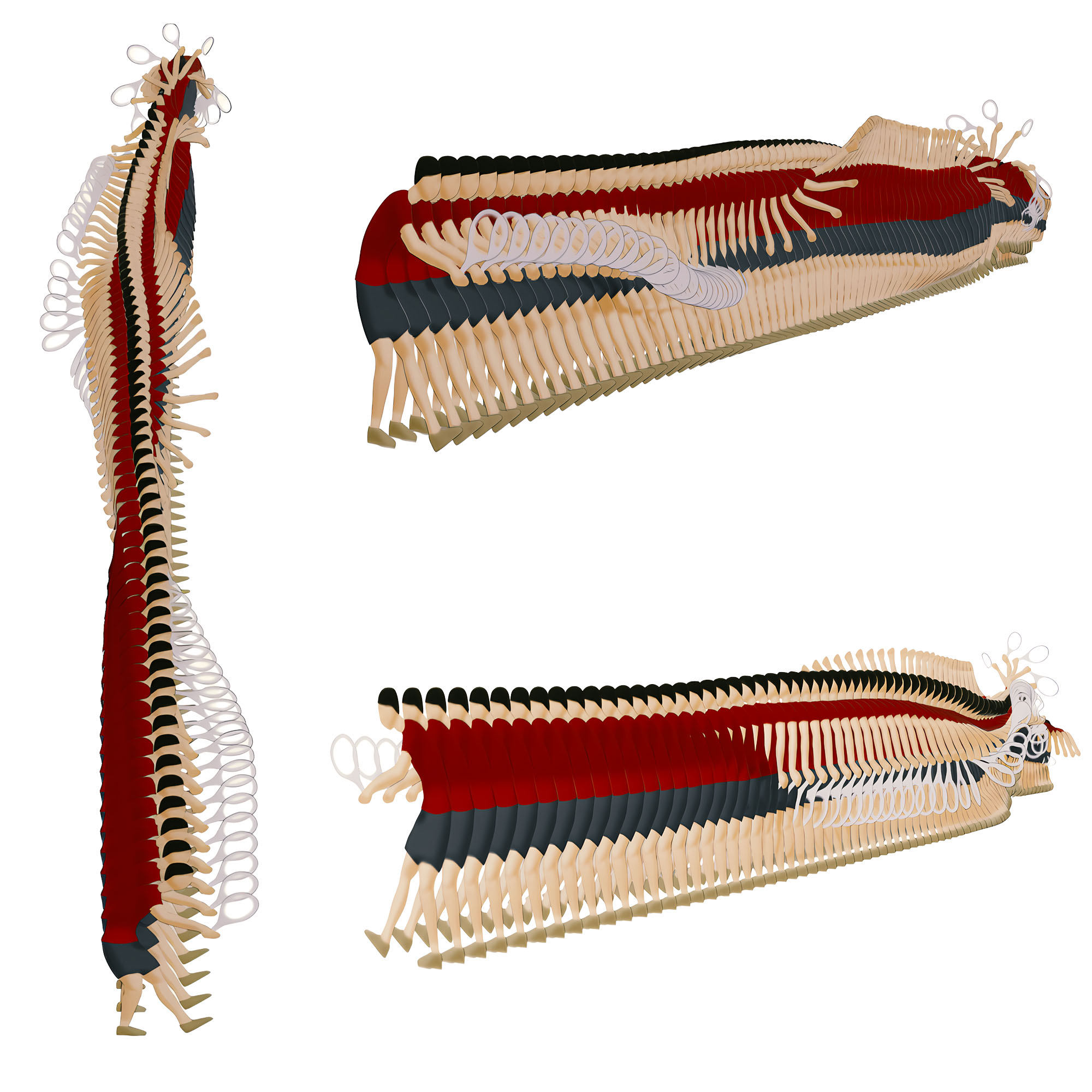

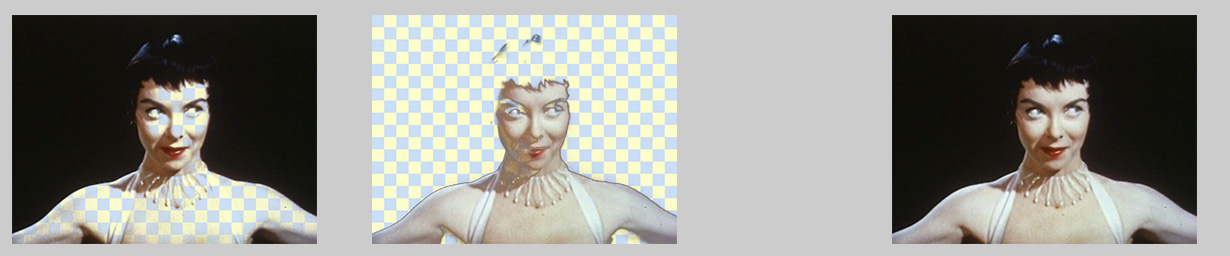

Colour Ranges: Highlight, Midtone, Shadow

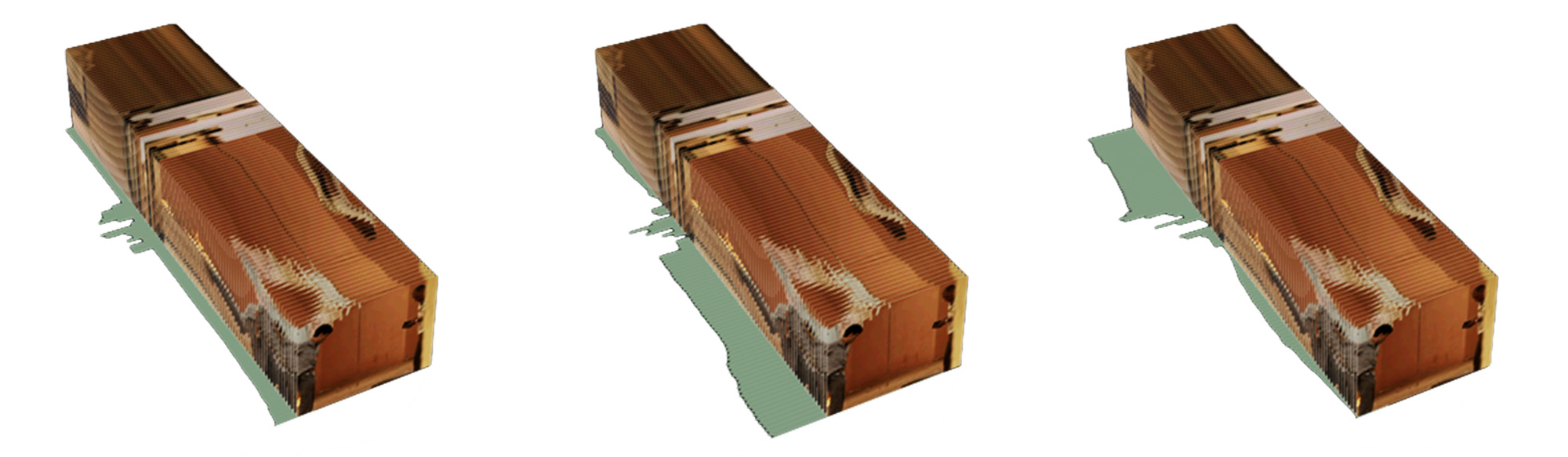

Each frame is analysed for its colour values, which are divided into three tonal ranges: highlights, midtones, and shadows. For each range, a version of the frame is generated showing only the relevant pixels–everything else is rendered transparent. This transformation dissolves the frame’s original rectangular shape, creating sculptural forms where contours, densities, and rhythms of the film become visually tangible.
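The three-way split can be sketched by classifying each pixel according to its Rec. 709 luma with two fixed thresholds. The threshold values `SHADOW_MAX` and `HIGHLIGHT_MIN` and all function names are hypothetical. The original's actual classification criteria are not documented here.

```python
SHADOW_MAX = 85       # hypothetical: luma below this counts as shadow
HIGHLIGHT_MIN = 170   # hypothetical: luma at or above this counts as highlight


def luminance(rgb):
    """Rec. 709 luma of an (r, g, b) pixel with channels in 0..255."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def tonal_range(rgb):
    y = luminance(rgb)
    if y < SHADOW_MAX:
        return "shadow"
    if y >= HIGHLIGHT_MIN:
        return "highlight"
    return "midtone"


def isolate_range(frame, wanted):
    """Return an RGBA copy of `frame` keeping only pixels in `wanted`.

    `frame` is a list of rows of (r, g, b) tuples. Every pixel outside
    the requested tonal range gets alpha 0 (fully transparent).
    """
    return [
        [(r, g, b, 255) if tonal_range((r, g, b)) == wanted else (r, g, b, 0)
         for (r, g, b) in row]
        for row in frame
    ]
```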

Selective Colour Removal

Specific tonal elements–such as pure white or deep black–can be selectively removed, while other colour data remains intact. This makes shifts in elements like camera movements or tracking shots directly comparable, revealing subtle aspects of the film’s visual structure.
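Selective removal can be sketched as a colour key with a tolerance. Pixels close enough to a target colour become transparent, and everything else is kept. The Chebyshev distance and the default tolerance of 10 are assumptions for illustration, and `remove_colour` is a hypothetical name.

```python
def remove_colour(frame, target, tolerance=10):
    """Make pixels within `tolerance` of `target` transparent.

    Distance is the largest per-channel difference (Chebyshev distance).
    With tolerance=10, a pixel is removed when each of its R, G and B
    values lies within 10 of the target.
    """
    tr, tg, tb = target
    out = []
    for row in frame:
        new_row = []
        for r, g, b in row:
            close = max(abs(r - tr), abs(g - tg), abs(b - tb)) <= tolerance
            new_row.append((r, g, b, 0 if close else 255))
        out.append(new_row)
    return out
```

Removing pure white and deep black together is simply two passes, one with `(255, 255, 255)` and one with `(0, 0, 0)` as the target.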

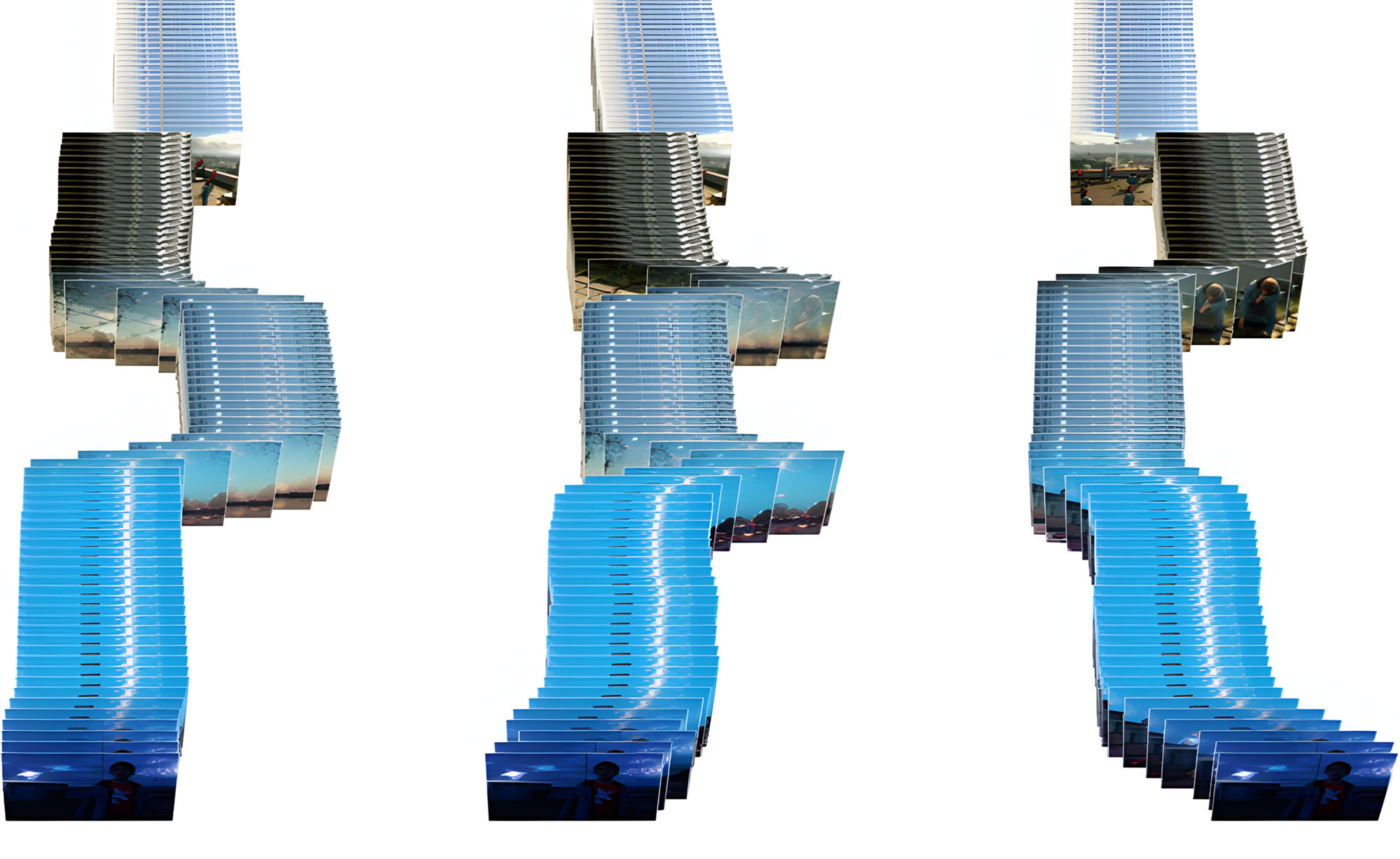

Colour-Based Frame Arrangement

As part of the colour analysis, each frame’s pixel count is calculated for highlights, midtones, and shadows. In arrangement mode, frames are repositioned along the horizontal axis based on these values–e.g., brighter frames shift further right when sorted by highlights. This visualisation provides a quick overview of how tonal patterns and dominant colour ranges develop across the film.
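Sorting by highlights can be sketched by converting each frame's share of bright pixels into a horizontal offset. The luma threshold, `max_offset` and the function names are hypothetical.

```python
def highlight_share(frame, threshold=170):
    """Fraction of pixels whose Rec. 709 luma is at or above `threshold`."""
    pixels = [p for row in frame for p in row]
    bright = sum(1 for r, g, b in pixels
                 if 0.2126 * r + 0.7152 * g + 0.0722 * b >= threshold)
    return bright / len(pixels)


def arrange_by_highlights(frames, max_offset=10.0):
    """Return an x offset per frame: brighter frames shift further right."""
    return [highlight_share(f) * max_offset for f in frames]
```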

Visualising Film Cuts

Arrangement mode can also visualise structural elements like film cuts. In this example, cut points are manually marked and aligned relative to a reference line–either horizontally or at the top. This produces a clear, ordered view of the film’s editing structure, with the first frame of each cut lined up in sequence.
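One reading of "the first frame of each cut lined up" is that every shot gets its own lane, and frame positions restart at the reference line whenever a new shot begins. The lane layout and parameter names below are assumptions, sketched for manually marked cut indices.

```python
def align_cuts(frame_count, cut_starts, spacing=1.0, lane_gap=2.0):
    """Lay out shots side by side with each shot's first frame at z = 0.

    `cut_starts` lists the manually marked index of the first frame of
    each shot, e.g. [40, 95]; frame 0 always starts the first shot.
    Returns (frame_index, x, z) per frame.
    """
    starts = sorted(set(cut_starts) | {0})
    layout = []
    shot = -1
    for i in range(frame_count):
        if shot + 1 < len(starts) and i == starts[shot + 1]:
            shot += 1                    # a new shot begins on this frame
        offset = i - starts[shot]        # distance from the shot's first frame
        layout.append((i, shot * lane_gap, -offset * spacing))
    return layout
```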

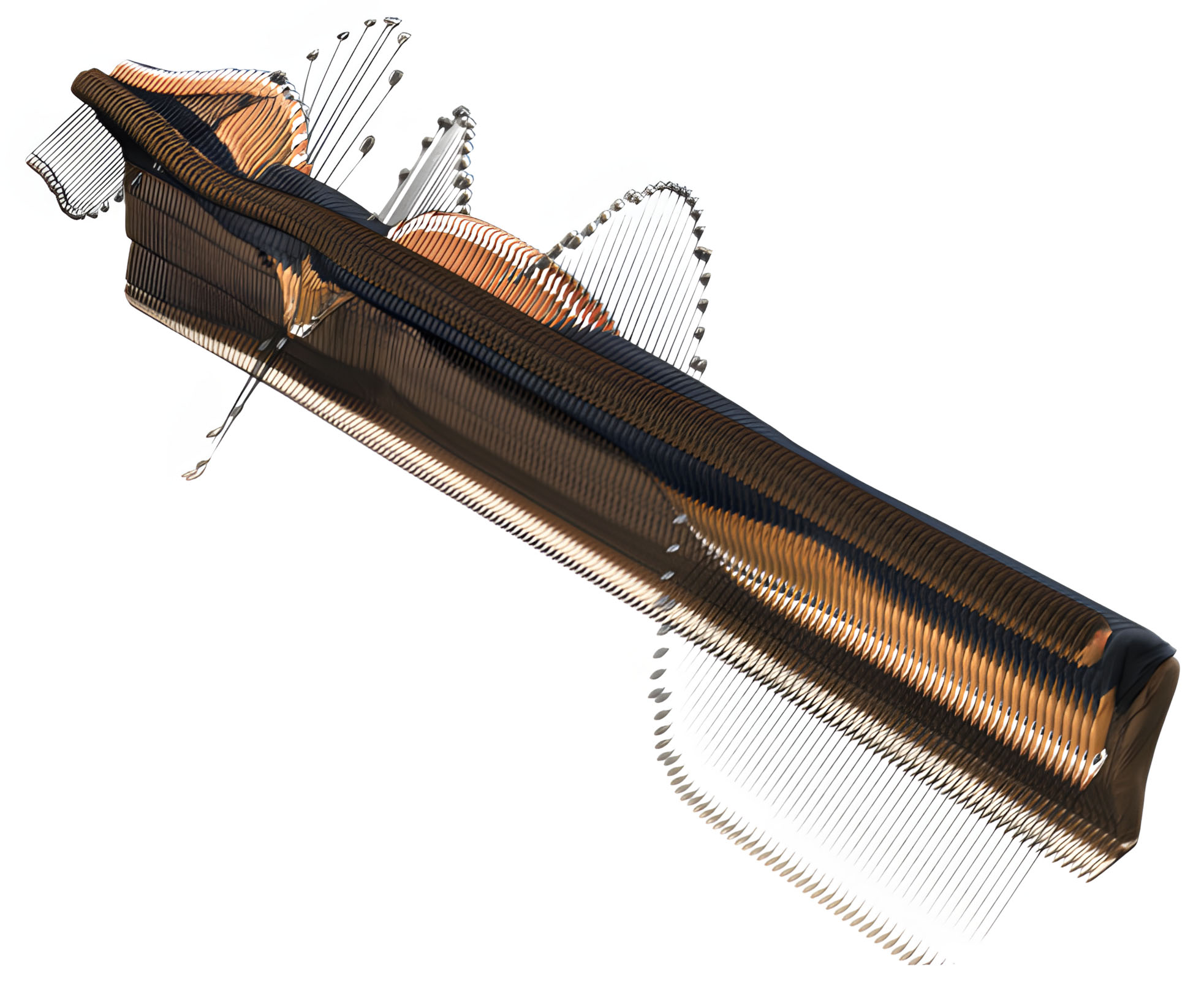

Audio Visualisation Techniques

Audio volume can be measured frame by frame and mapped proportionally to each frame’s height, width, or both–creating a visualisation of volume levels across time.
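Measuring volume per frame means grouping audio samples by video frame. At 48 kHz and 24 fps, each frame spans 2,000 samples. This minimal sketch assumes mono samples in the range -1..1 and uses RMS as the volume measure. `frame_sizes` then scales a frame's height or width in proportion to its level. Both function names are hypothetical.

```python
import math


def volume_per_frame(samples, sample_rate=48000, fps=24):
    """RMS level of the audio samples belonging to each video frame."""
    per_frame = sample_rate // fps      # 48000 // 24 = 2000 samples per frame
    levels = []
    for start in range(0, len(samples), per_frame):
        chunk = samples[start:start + per_frame]
        levels.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
    return levels


def frame_sizes(levels, max_size=1.0):
    """Scale levels proportionally so the loudest frame reaches `max_size`."""
    peak = max(levels) or 1.0           # avoid dividing by zero on pure silence
    return [max_size * level / peak for level in levels]
```

At 44.1 kHz the ratio is not an integer (1,837.5 samples per frame). In that case the integer division in this sketch would slowly drift out of sync, and a real implementation would compute frame boundaries from timestamps instead.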

In a more experimental variation, audio frequency data is used to control frame rotation. When played, the sequence produces striking, sound-reactive visuals that shift dynamically with the audio.
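The rotation mapping is not specified in detail, so this sketch assumes one plausible rule. It finds the strongest frequency in the frame's audio chunk and maps it linearly onto 0 to 360 degrees, with 0 Hz at 0 degrees and the Nyquist frequency at 360. `dominant_frequency` and `rotation_for` are hypothetical names.

```python
import cmath
import math


def dominant_frequency(chunk, sample_rate):
    """Frequency (Hz) of the strongest DFT bin, ignoring the DC term.

    A direct O(n^2) DFT keeps the sketch dependency-free; a real tool
    would use an FFT library instead.
    """
    n = len(chunk)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):      # positive frequencies up to Nyquist
        acc = sum(chunk[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate / n


def rotation_for(chunk, sample_rate, max_angle=360.0):
    """Map the dominant frequency linearly onto 0..max_angle degrees."""
    nyquist = sample_rate / 2
    return max_angle * dominant_frequency(chunk, sample_rate) / nyquist
```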

Frame Metadata with ID Tags

Much like the ID3 tags embedded in MP3 files, each film frame carries digital ID tags. Instead of genre or artist, they record measurable attributes such as highlights, midtones, and audio volume. In Preview Mode, hovering over a frame reveals these values instantly.

Search Mode uses this data to locate visually or sonically similar frames, helping trace patterns across the film.
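Search Mode can be sketched as a nearest-neighbour query over each frame's tag values. The sketch assumes tags are stored as numbers normalised to 0..1 and compared by Euclidean distance. The tag keys and function names are illustrative.

```python
import math


def tag_distance(a, b, keys):
    """Euclidean distance between two frames' ID tags over the given keys."""
    return math.sqrt(sum((a[k] - b[k]) ** 2 for k in keys))


def find_similar(tags, query_index, keys=("highlights", "midtones", "volume"), k=3):
    """Indices of the k frames whose tags are closest to the query frame's."""
    query = tags[query_index]
    others = [i for i in range(len(tags)) if i != query_index]
    others.sort(key=lambda i: tag_distance(tags[i], query, keys))
    return others[:k]
```

Restricting `keys` to `("volume",)` would turn the same query into a search for sonically similar frames only.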

These tags can also be visualised in 3D space as floating horizontal bars, their widths reflecting the underlying values–turning abstract data into an intuitive visual landscape.

User-Generated Frame Tags

User ID tags represent an experimental concept based on capturing real-time data from the viewer. This might include measurements like pulse or facial expressions recorded via sensors or a camera. These values could be stored as ID tags and used to infer viewer habits or emotional states–potentially influencing how the film unfolds. The example shown is based on simulated data.

Eye-Tracking Gaze Mapping

An eye-tracking camera records the viewer’s gaze, overlaying the image with a grid to identify where attention is focused–for example, cell C5. This data is saved as an ID tag.

In this experimental setup, the eye-tracking data is simulated. When visualised in 3D, only the segments that correspond to these gaze points are shown, offering an abstracted view of visual attention across the film.
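The grid lookup, along with the image segment that would be kept for display, can be sketched as follows. Several details are assumptions. These include the 8 × 8 grid, the convention of letters for columns and numbers for rows behind a label like C5, and the helper names. The sketch supports at most 26 columns.

```python
import string


def gaze_to_cell(x, y, width, height, cols=8, rows=8):
    """Label the grid cell under a gaze point, e.g. 'C5'.

    Assumes letters index columns (A = leftmost) and numbers index rows
    (1 = top); the original grid size and labelling are not specified.
    """
    col = min(int(x / width * cols), cols - 1)    # clamp points on the right edge
    row = min(int(y / height * rows), rows - 1)   # clamp points on the bottom edge
    return f"{string.ascii_uppercase[col]}{row + 1}"


def cell_bounds(cell, width, height, cols=8, rows=8):
    """Pixel rectangle (left, top, right, bottom) of a labelled cell."""
    col = string.ascii_uppercase.index(cell[0])
    row = int(cell[1:]) - 1
    cw, ch = width / cols, height / rows
    return (col * cw, row * ch, (col + 1) * cw, (row + 1) * ch)
```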

Examples of Use

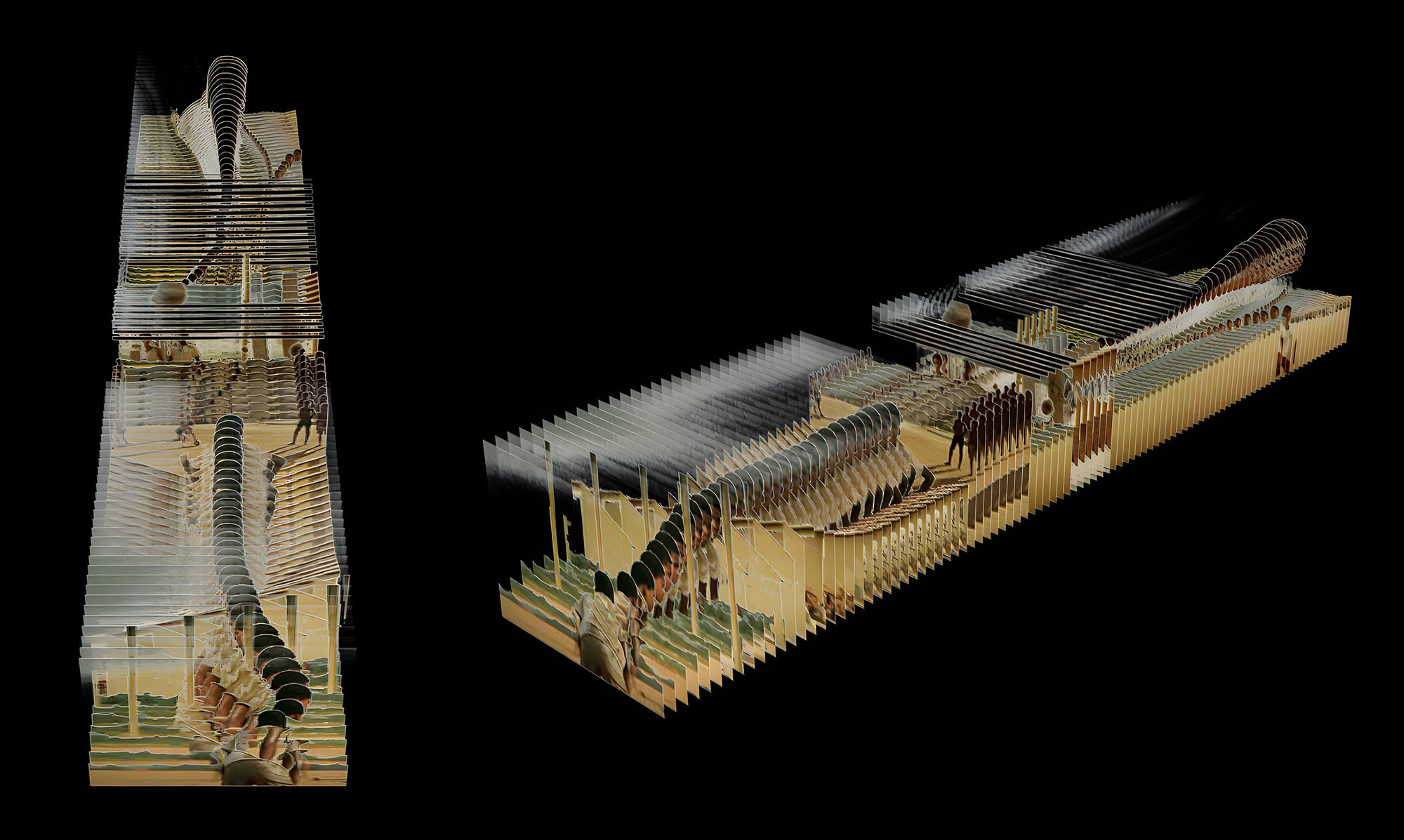

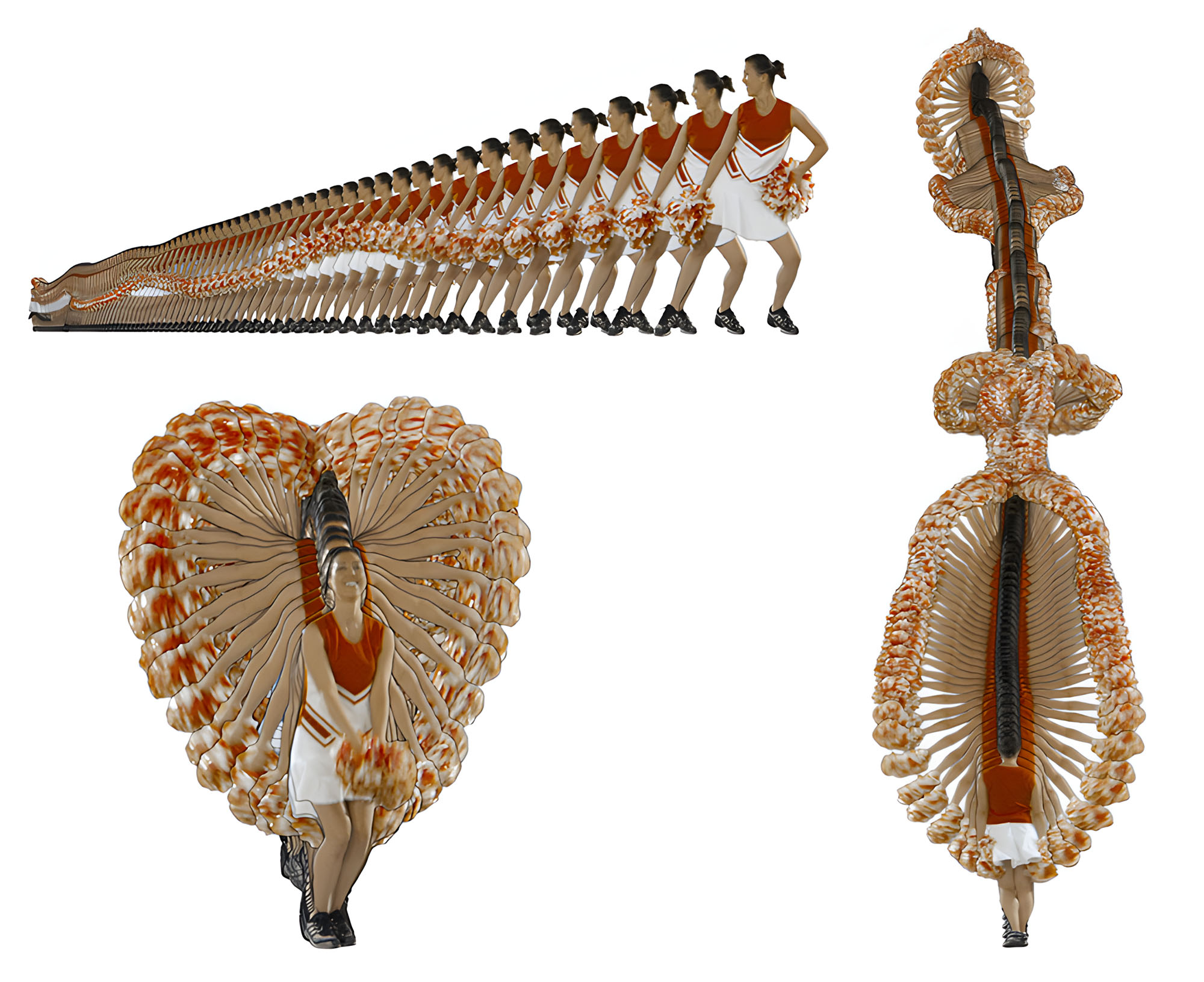

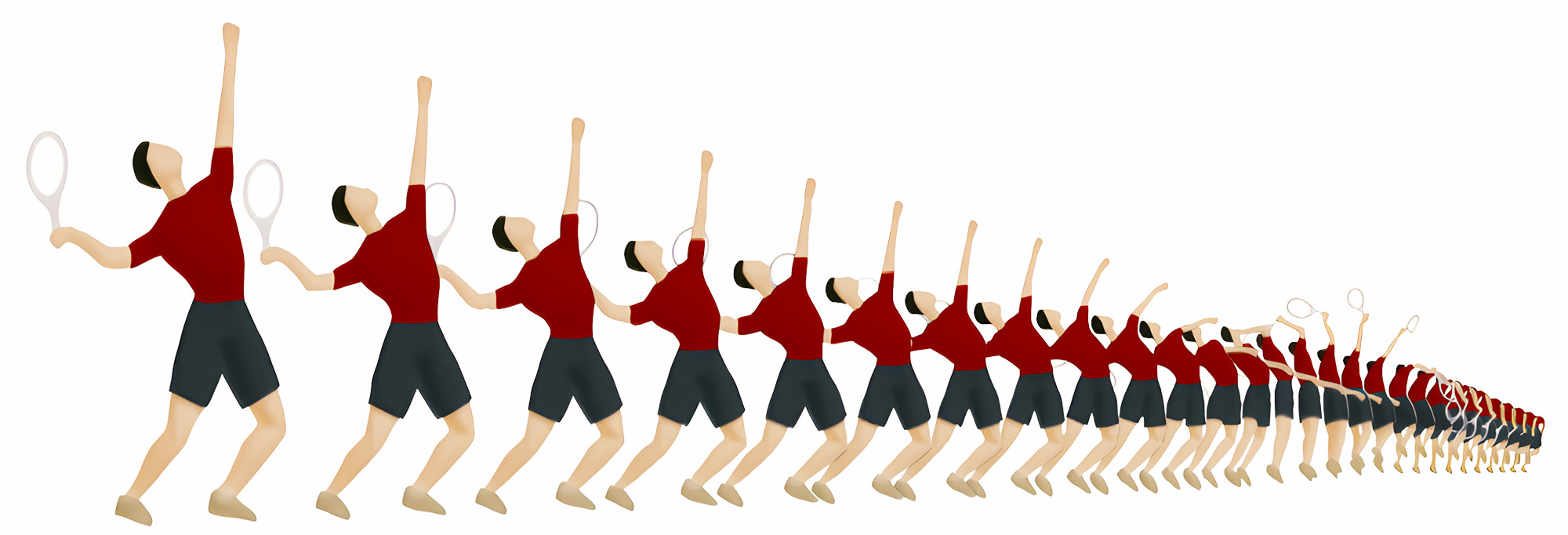

Extracting Motion Patterns

All previously shown examples are based on feature films–but it soon became clear that this tool’s potential goes far beyond the realm of entertainment. One particularly powerful feature is the selective extraction of frames, such as removing backgrounds, which opens new possibilities for visualising motion with more clarity and focus.
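For footage shot with a locked-off camera, one simple way to remove the background is to compare every frame with a clean background plate and keep only the pixels that differ. This is a generic differencing sketch with an assumed threshold, not necessarily how the original footage was prepared. Moving cameras or changing light would need more robust segmentation.

```python
def remove_background(frame, background, threshold=30):
    """Keep only pixels that differ noticeably from a static background plate.

    Both images are equally sized lists of rows of (r, g, b) tuples. A pixel
    counts as foreground when the sum of its absolute channel differences
    from the background exceeds `threshold`; everything else becomes
    transparent.
    """
    out = []
    for frow, brow in zip(frame, background):
        out.append([
            (r, g, b, 255) if abs(r - br) + abs(g - bg) + abs(b - bb) > threshold
            else (r, g, b, 0)
            for (r, g, b), (br, bg, bb) in zip(frow, brow)
        ])
    return out
```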

Even complex movements can be captured across their full duration and restructured into visual data. This makes motion patterns not only visible but also analyzable–revealing the hidden logic and dynamics of movement in a way that's both accessible and insightful.

Motion Study: Tennis Serve

This visualisation shows a tennis player’s serve and offers a valuable tool for sports science. Multiple sequences can be loaded and compared, making differences in speed, position, or rhythm easy to spot.

Movements are broken down frame by frame, allowing detailed analysis over time and space. ID tags can include biomechanical data–like joint angles or body alignment–turning visuals into measurable performance metrics.
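A joint angle can be derived from three tracked points, such as shoulder, elbow and wrist, using the dot product of the two limb vectors. The sketch assumes 2D image coordinates from a single camera. It therefore measures the angle as projected into the image plane, not the true 3D angle. `joint_angle` is a hypothetical name.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint `b` formed by points a-b-c.

    Points are (x, y) image coordinates, such as tracked marker positions
    for shoulder (a), elbow (b) and wrist (c).
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    cos_theta = max(-1.0, min(1.0, dot / norm))   # guard against rounding drift
    return math.degrees(math.acos(cos_theta))
```

Evaluated per frame, such an angle becomes a numeric ID tag that can be plotted or compared across several serves.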

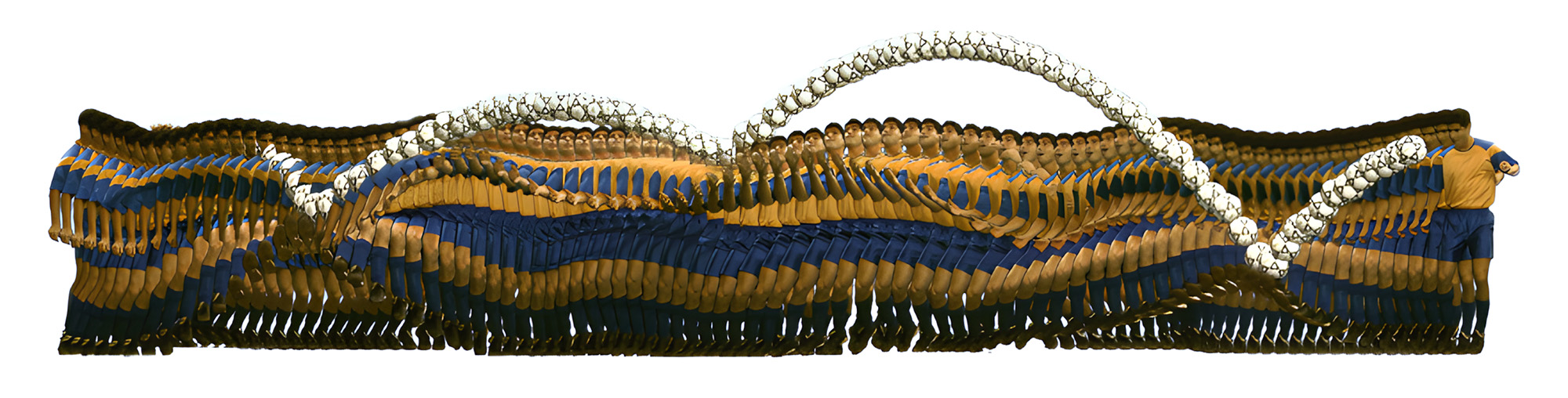

MoFrames – Visualising Motion Complexity

This approach to visualising motion sequences evolved into a dedicated project called MoFrames. It breaks down and reassembles movement into forms that reveal hidden rhythms, directional shifts, and spatial interactions–offering new ways of seeing and understanding motion.

Crash Test Visual Comparison

Comparing two parallel video recordings–such as crash tests–can be visually overwhelming. Action unfolds too quickly for the eye to process. By using this tool and filtering out background elements, the visual data is reduced to essentials, highlighting relevant motion and simplifying analysis.

Differences in deformation become instantly visible. Time and image are synchronised, making changes traceable frame by frame. Adjusting frame intervals or stepping through the sequence reveals deeper nuances.

Tailoring test conditions to the tool–such as using colour codes on vehicle parts or dummies–can enhance precision. ID tags can store sensor data, offering a detailed and quantitative view of the deformation process.
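Colour-coded parts could be tracked by finding the pixels that match a marker colour in each frame and taking their centroid. The tolerance, marker colour and function names are assumptions. The resulting positions are the kind of value that could be stored as an ID tag.

```python
def marker_centroid(frame, marker, tolerance=40):
    """Centre (x, y) of pixels matching a colour-coded marker, or None.

    `frame` is a list of rows of (r, g, b) tuples. A pixel matches when
    each channel is within `tolerance` of the marker colour.
    """
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            if all(abs(c - m) <= tolerance for c, m in zip((r, g, b), marker)):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None                      # marker not visible in this frame
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def track_marker(frames, marker, tolerance=40):
    """Marker position per frame, e.g. to chart how far a painted part moves."""
    return [marker_centroid(f, marker, tolerance) for f in frames]
```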

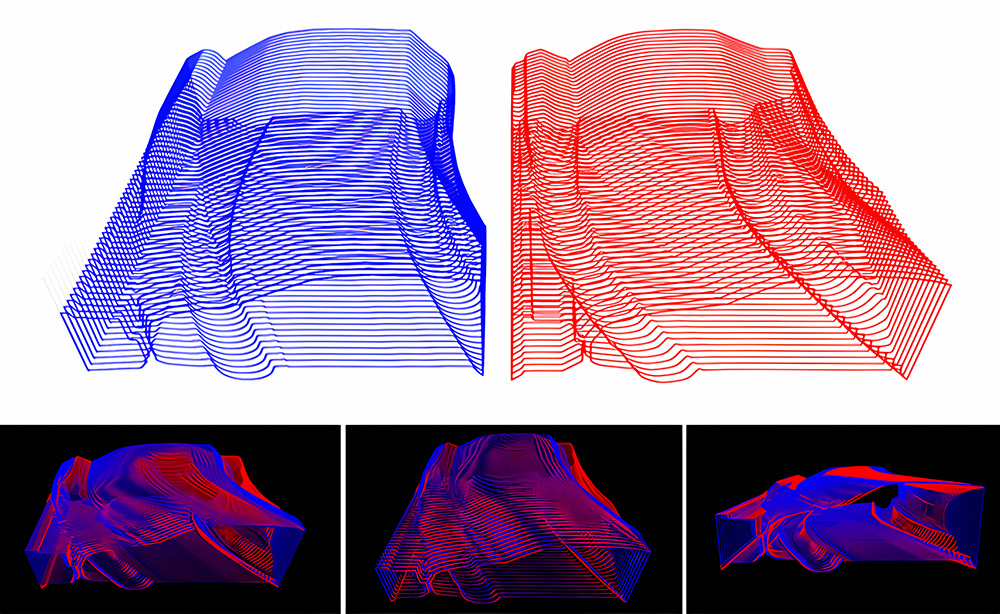

Crash Test Outline Mapping

Another display mode shows only the vehicle outlines, each rendered in a distinct colour. Overlaid, these outlines highlight structural differences that become clearly visible during playback.
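Outlines can be derived from a silhouette mask, for instance the output of background removal, by keeping only the set pixels that border an unset one. The sketch below uses 4-neighbourhoods, and `outline` is a hypothetical name. Each vehicle's outline could then be drawn in its own colour by rendering each result with a different tint.

```python
def outline(mask):
    """Boundary pixels of a silhouette mask (list of rows of bools).

    A pixel is on the outline if it is set and at least one of its four
    neighbours is unset or lies outside the image.
    """
    h, w = len(mask), len(mask[0])

    def filled(y, x):
        return 0 <= y < h and 0 <= x < w and mask[y][x]

    return [
        [mask[y][x] and not all(filled(y + dy, x + dx)
                                for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)))
         for x in range(w)]
        for y in range(h)
    ]
```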

About

Recreating Movement – tools for analyzing film sequences

This project was presented as a diploma thesis by Martin Hilpoltsteiner in July 2005 at the University of Applied Sciences Würzburg, Germany (Department of Communication Design).

Examiners: Prof. Erich Schoels, Prof. Kerstin Stutterheim.

Idea, Concept, Design, Coding: Martin Hilpoltsteiner

Used Footage

Metropolis – Fritz Lang (Friedrich Wilhelm Murnau Stiftung)

Crash test footage (Euro NCAP)

Sport footage (ribbit films)

Simons Ranch – Tina Eckhoff

Ich rette das Multiversum – Ulf Groote

FAQ – beige GT (Brock, Hudl)

Design for Dreaming (archive.org)

Awards

Designpreis Deutschland – Nominee

Lucky Strike Junior Design Award – Winner

Bayerischer Staatspreis für Nachwuchsdesigner – Winner

iF Award, Communication Design – Winner

TTA Top Talent Award – Winner

Exhibitions

ZKM – Mind Frames (2006)

Technical Museum Vienna (2006)

Neues Museum for Art and Design, Nuremberg (2006)

Entry 2006, Zollverein, Essen

IHK Munich (2006)

Designale at Heim und Handwerk, Munich (2006)

Contact

Martin Hilpoltsteiner | Selected Projects Portfolio

info@moframes.net